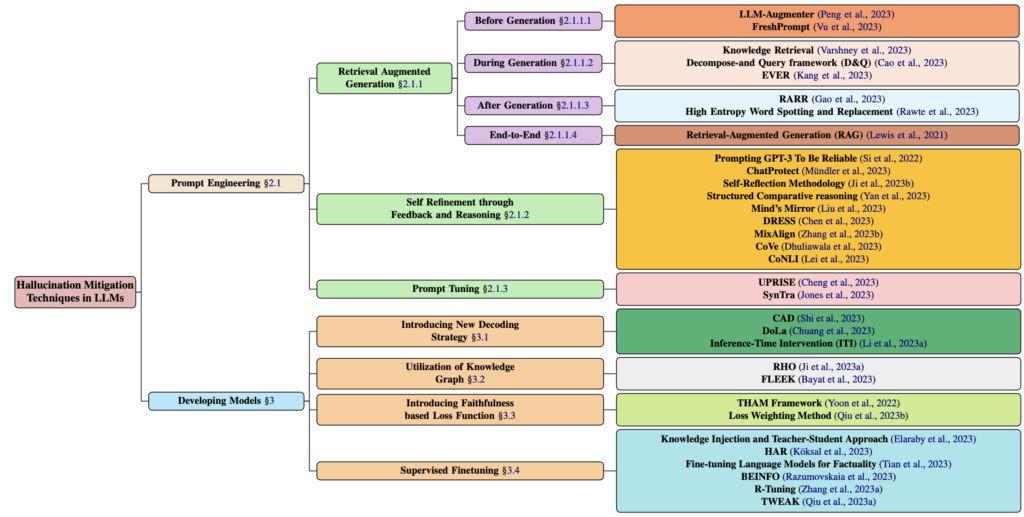

A Comprehensive Survey of Hallucination Mitigation Techniques in Large Language Models

This survey reviews 32 techniques for mitigating hallucination in LLMs and introduces a taxonomy that categorizes these methods.

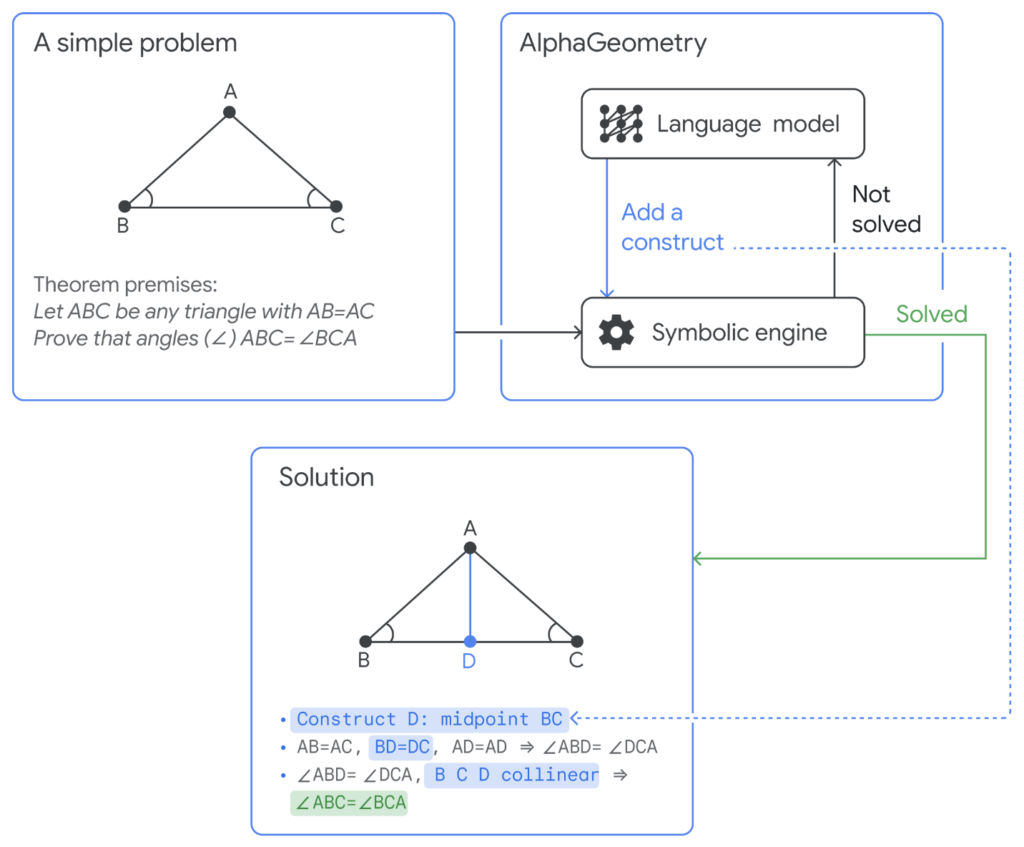

AlphaGeometry: An Olympiad-level AI system for geometry

DeepMind introduced its AI system for solving Olympiad geometry problems. Trained exclusively on synthetic data, AlphaGeometry uses a neuro-symbolic approach and consists of two components:

- Neural language model for predicting geometry constructions

- Symbolic deduction engine that deduces conclusions through logical rules
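The interplay between the two components can be illustrated with a toy loop. The symbolic engine derives everything it can from the known facts, and when the goal is still out of reach, a construction proposer adds a new object. This is a minimal sketch under heavy assumptions, not AlphaGeometry's implementation: the only rules are symmetry and transitivity of parallel lines, and `stub_proposer` is a hypothetical stand-in for the language model.

```python
from itertools import product

# A fact is a tuple such as ("para", "AB", "CD"): line AB is parallel to line CD.
Fact = tuple[str, str, str]


def deductive_closure(facts: set[Fact]) -> set[Fact]:
    """Toy symbolic engine: apply symmetry and transitivity of 'para' until nothing new appears."""
    closed = set(facts)
    while True:
        new: set[Fact] = set()
        for pred, a, b in closed:
            if pred == "para":
                new.add(("para", b, a))  # symmetry
        for (p1, a, b), (p2, c, d) in product(closed, repeat=2):
            if p1 == p2 == "para" and b == c and a != d:
                new.add(("para", a, d))  # transitivity
        if new <= closed:
            return closed
        closed |= new


def solve(premises: set[Fact], goal: Fact, propose_construction, max_steps: int = 3) -> bool:
    """Alternate symbolic deduction with (stubbed) neural construction proposals."""
    facts = set(premises)
    for _ in range(max_steps + 1):
        facts = deductive_closure(facts)
        if goal in facts:
            return True
        facts |= propose_construction(facts)
    return False


def stub_proposer(facts: set[Fact]) -> set[Fact]:
    # Hypothetical stand-in for the language model: "construct line EF parallel to CD".
    return {("para", "CD", "EF")}


premises = {("para", "AB", "CD")}
goal = ("para", "AB", "EF")
print(solve(premises, goal, lambda facts: set()))  # False: deduction alone is stuck
print(solve(premises, goal, stub_proposer))        # True: the construction unlocks the proof
```

The division of labour is the point of the sketch: the deduction engine is exhaustive but cannot invent new objects, while the proposer only has to suggest which object to add.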

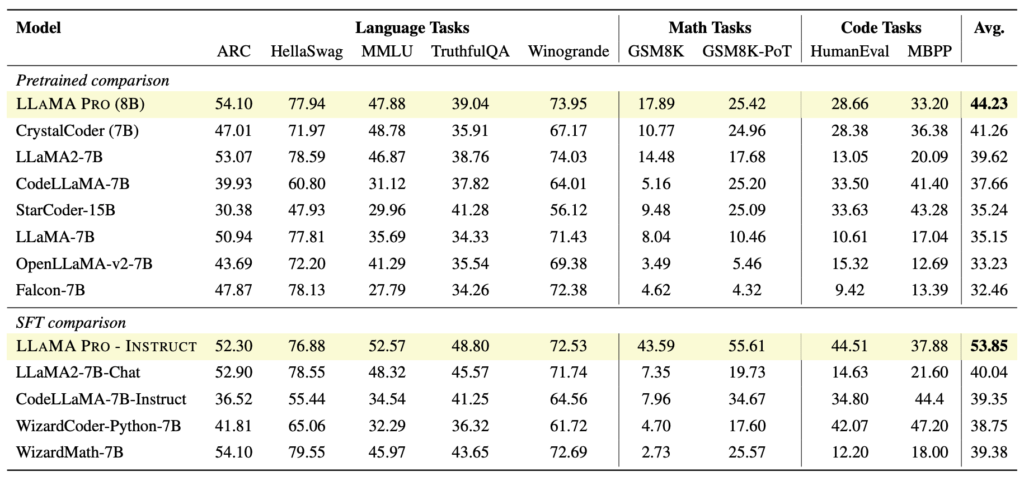

LLAMA PRO: Progressive LLaMA with Block Expansion

The researchers propose a new LLM post-pretraining method that expands the Transformer blocks and then tunes only the expanded blocks on new data. They use it to build LLAMA PRO-8.3B from LLaMA2-7B and show that this model, along with its ‘Instruct’ version, outperforms other open LLaMA-family models.
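Block expansion works because a copied residual block whose output projection is zero-initialized behaves as an identity mapping. As a result, the expanded model initially computes exactly what the original did. The sketch below illustrates that idea with toy scalar "blocks" instead of real attention and MLP layers. Copying the last block of each group reflects our reading of the paper, and `Block`, `expand_blocks`, and `group_size` are hypothetical names.

```python
import copy
from dataclasses import dataclass


@dataclass
class Block:
    """Toy residual block: y = x + w_out * relu(w_in * x), applied element-wise."""
    w_in: float
    w_out: float
    trainable: bool = False

    def __call__(self, x: list[float]) -> list[float]:
        return [xi + self.w_out * max(0.0, self.w_in * xi) for xi in x]


def expand_blocks(blocks: list[Block], group_size: int) -> list[Block]:
    """After every group of `group_size` blocks, insert a copy of the group's last block."""
    expanded = []
    for i, block in enumerate(blocks):
        frozen = copy.copy(block)
        frozen.trainable = False  # original blocks stay frozen
        expanded.append(frozen)
        if (i + 1) % group_size == 0:
            new_block = copy.copy(block)
            new_block.w_out = 0.0       # zero output projection -> identity at initialization
            new_block.trainable = True  # only the inserted blocks are tuned on new data
            expanded.append(new_block)
    return expanded


def run(blocks: list[Block], x: list[float]) -> list[float]:
    for block in blocks:
        x = block(x)
    return x


original = [Block(0.5, 0.3), Block(-1.0, 0.2), Block(2.0, -0.1), Block(1.0, 0.4)]
expanded = expand_blocks(original, group_size=2)
x = [1.0, -2.0, 0.5]
print(len(expanded))                         # 6
print(run(expanded, x) == run(original, x))  # True: new blocks start as identities
print(sum(b.trainable for b in expanded))    # 2: only the inserted blocks are trainable
```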

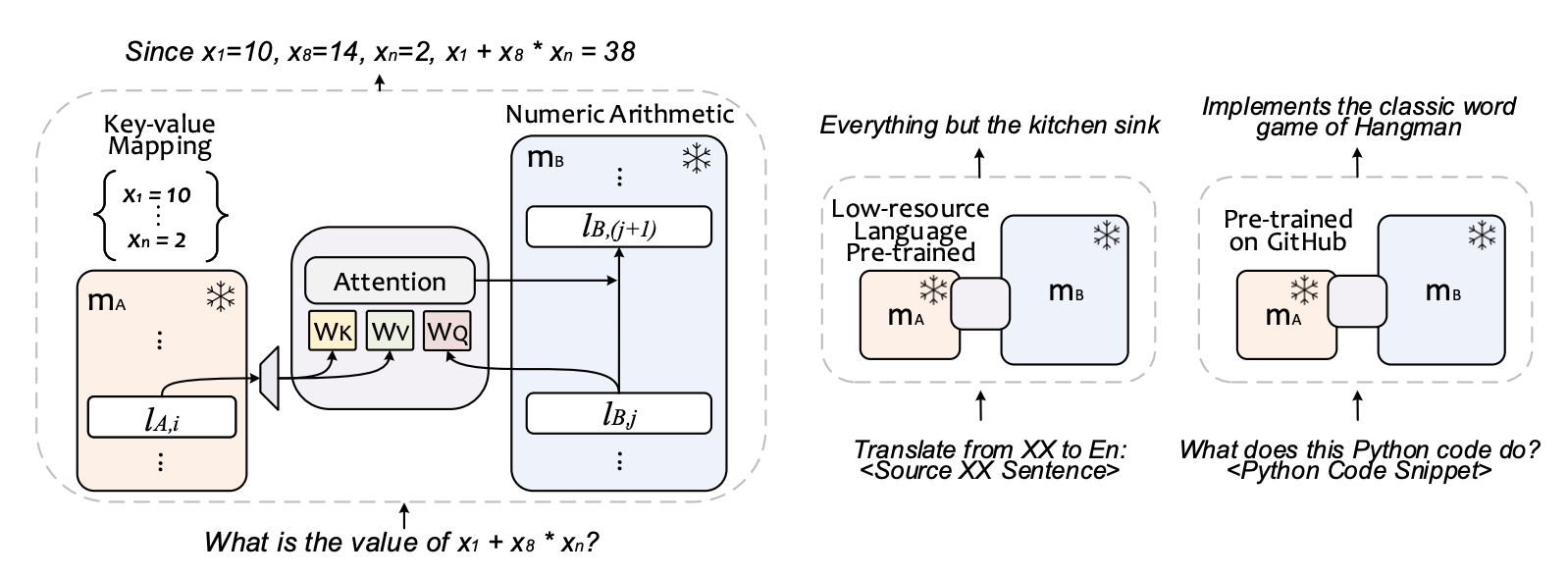

LLM Augmented LLMs: Expanding Capabilities Through Composition

Google researchers propose CALM (Composition to Augment Language Models), which composes the representations of an existing LLM with those of another model to give it new capabilities. The paper gives two examples:

- Augmenting PaLM2-S with a smaller model trained on low-resource languages improved performance on translation and arithmetic reasoning tasks.

- Augmenting PaLM2-S with a code-specific model led to a 40% improvement in code generation.
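Here is a rough sketch of the composition step. It assumes, as we read the paper, that a learned projection maps the augmenting model's hidden states into the anchor model's width. The anchor states then cross-attend to these projected states, and the result is added back residually while both models stay frozen. Plain Python lists stand in for tensors, a single attention head is used, and `compose` and its weight arguments are hypothetical.

```python
import math


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(scores: list[float]) -> list[float]:
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def compose(anchor, augment, proj, w_q, w_k, w_v):
    """Cross-attend from anchor-model states to projected augmenting-model states.

    anchor:  hidden states from one layer of the frozen anchor model (width d_a)
    augment: hidden states from one layer of the frozen augmenting model (width d_b)
    proj:    learned d_a x d_b projection; w_q, w_k, w_v: learned d_a x d_a weights
    """
    projected = [matvec(proj, h) for h in augment]
    keys = [matvec(w_k, h) for h in projected]
    values = [matvec(w_v, h) for h in projected]
    scale = math.sqrt(len(anchor[0]))
    out = []
    for h in anchor:
        q = matvec(w_q, h)
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        attended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(h))]
        out.append([hi + ai for hi, ai in zip(h, attended)])  # residual add into the anchor
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
anchor = [[1.0, 0.0], [0.0, 1.0]]              # 2 tokens, d_a = 2
augment = [[1.0, 2.0, 3.0], [0.5, 0.0, -1.0]]  # 2 tokens, d_b = 3
proj = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.2]]
print(compose(anchor, augment, proj, identity, identity, identity))
```

Because the attended signal is added residually, an all-zero projection leaves the anchor's states unchanged. In this toy, that means the anchor model's own behaviour is the starting point.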

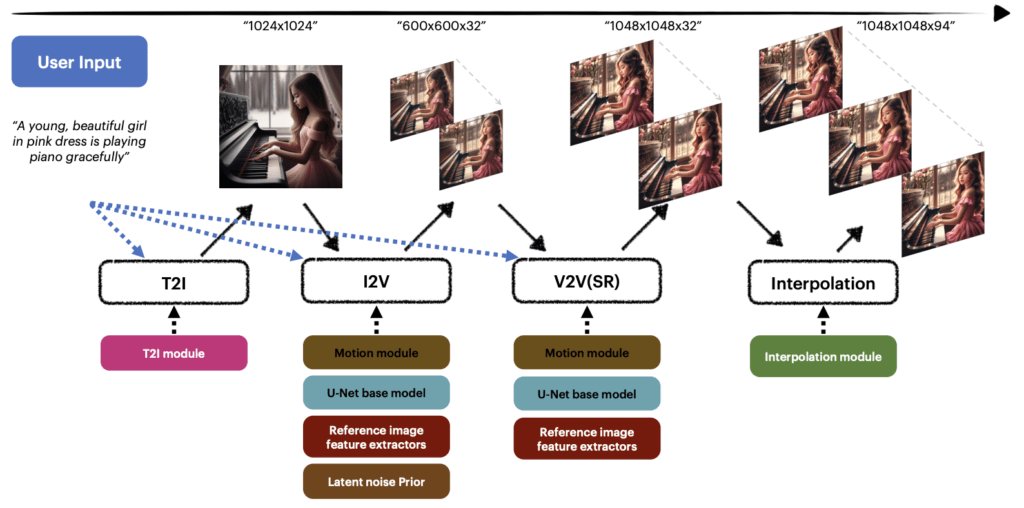

MagicVideo-V2: Multi-Stage High-Aesthetic Video Generation

ByteDance introduced this multi-stage text-to-video model, which integrates text-to-image, image-to-video, video-to-video, and video frame interpolation modules into an end-to-end video generation pipeline. The paper claims that this model outperforms peer models such as Moon Valley, Morph, Pika 1.0, Runway, and Stable Video Diffusion.
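The pipeline idea can be sketched as a simple chain of stages, where each module consumes the previous module's output. Everything here is a toy assumption: frames are short lists of numbers and the three generative stages are hypothetical stubs. The interpolation step uses plain linear blending rather than MagicVideo-V2's learned interpolation module.

```python
from typing import Callable

Frame = list[float]  # toy stand-in for an image
Video = list[Frame]


def text_to_image(prompt: str) -> Frame:
    return [float(len(prompt) % 7)] * 4  # hypothetical stub for a text-to-image model


def image_to_video(image: Frame) -> Video:
    return [[p + t for p in image] for t in range(3)]  # stub: animate into 3 frames


def video_to_video(video: Video) -> Video:
    return [frame + frame for frame in video]  # stub: "upscale" by doubling frame size


def interpolate_frames(video: Video) -> Video:
    """Insert the linear midpoint between each pair of consecutive frames."""
    if not video:
        return []
    out: Video = []
    for a, b in zip(video, video[1:]):
        out.append(a)
        out.append([(x + y) / 2 for x, y in zip(a, b)])
    out.append(video[-1])
    return out


def run_pipeline(prompt: str, stages: list[Callable]) -> Video:
    """Feed each stage's output into the next, starting from the text prompt."""
    result = prompt
    for stage in stages:
        result = stage(result)
    return result


video = run_pipeline("a cat surfing", [text_to_image, image_to_video, video_to_video, interpolate_frames])
print(len(video), len(video[0]))  # 5 8
```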

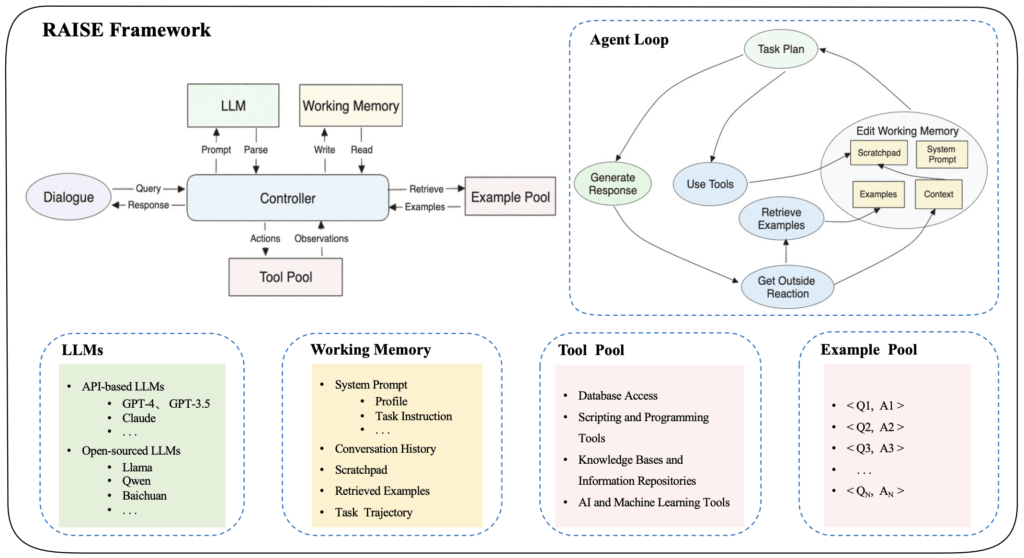

This paper introduces a new architecture called RAISE (Reasoning & Acting through Scratchpad & Examples) to enhance the integration of LLMs into conversational agents. Built as an enhancement of the ReAct framework, RAISE incorporates a dual-component memory system (similar to short-term and long-term memory in humans) to maintain context and continuity in conversation.
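To make the dual-component memory concrete, here is a small sketch of an agent memory with two parts: a bounded scratchpad for recent context and a store of past examples retrieved by similarity. It is an assumption-laden illustration, not RAISE's implementation. The class and method names are hypothetical, and word overlap stands in for whatever retrieval the paper uses.

```python
from collections import deque


class DualMemory:
    """Toy memory: a short-term scratchpad plus a long-term store of (query, response) examples."""

    def __init__(self, scratchpad_size: int = 4):
        self.scratchpad: deque[str] = deque(maxlen=scratchpad_size)  # oldest notes drop out
        self.examples: list[tuple[str, str]] = []

    def note(self, text: str) -> None:
        self.scratchpad.append(text)

    def add_example(self, query: str, response: str) -> None:
        self.examples.append((query, response))

    def retrieve(self, query: str, k: int = 1) -> list[tuple[str, str]]:
        """Return the k stored examples sharing the most words with the query."""
        words = set(query.lower().split())
        return sorted(
            self.examples,
            key=lambda ex: len(words & set(ex[0].lower().split())),
            reverse=True,
        )[:k]

    def build_prompt(self, query: str) -> str:
        lines = ["Examples:"]
        lines += [f"Q: {q}\nA: {a}" for q, a in self.retrieve(query)]
        lines += ["Scratchpad:", *self.scratchpad, f"User: {query}"]
        return "\n".join(lines)


memory = DualMemory(scratchpad_size=2)
for turn in ["User asked about a 2-bedroom flat", "Agent listed two options", "User prefers the cheaper one"]:
    memory.note(turn)
memory.add_example("what is the rent", "The rent is listed on the property page.")
memory.add_example("is parking included", "Parking depends on the building.")
print(memory.build_prompt("how much is the rent"))
```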

Chain-of-Table: Evolving Tables in the Reasoning Chain for Table Understanding

This paper proposes a new framework for table-based reasoning, an emerging area of research in which tabular data is used to express the intermediate thoughts of the LLM's reasoning process. Chain-of-Table lets the LLM dynamically plan a chain of table operations based on the input table and its associated question, leading to more accurate and reliable predictions.
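The core loop can be sketched as applying a chain of table operations while keeping each intermediate table as a "thought". In Chain-of-Table the LLM chooses each next operation after seeing the current table. In this minimal sketch the chain is fixed by hand, and the operation names and example table are hypothetical.

```python
from typing import Callable

Table = list[dict]


def select_rows(table: Table, predicate: Callable[[dict], bool]) -> Table:
    return [row for row in table if predicate(row)]


def select_columns(table: Table, columns: list[str]) -> Table:
    return [{c: row[c] for c in columns} for row in table]


def sort_by(table: Table, column: str, descending: bool = False) -> Table:
    return sorted(table, key=lambda row: row[column], reverse=descending)


def run_chain(table: Table, chain: list[tuple[Callable, dict]]) -> list[Table]:
    """Apply each (operation, arguments) step and return every intermediate table."""
    states = [table]
    for op, kwargs in chain:
        states.append(op(states[-1], **kwargs))
    return states


medals = [
    {"country": "A", "gold": 3, "silver": 1},
    {"country": "B", "gold": 5, "silver": 0},
    {"country": "C", "gold": 1, "silver": 4},
]
# Question: "Among countries with at least 2 golds, which has the most?"
# A planner LLM would pick these steps one at a time; here they are hard-coded.
chain = [
    (select_rows, {"predicate": lambda row: row["gold"] >= 2}),
    (select_columns, {"columns": ["country", "gold"]}),
    (sort_by, {"column": "gold", "descending": True}),
]
states = run_chain(medals, chain)
print(states[-1])                # [{'country': 'B', 'gold': 5}, {'country': 'A', 'gold': 3}]
print(states[-1][0]["country"])  # B
```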

DocLLM: A layout-aware generative language model for multimodal document understanding

JPMorgan researchers propose DocLLM, a lightweight extension to standard LLMs that improves reasoning over the visual documents common in enterprises (e.g., forms, invoices, receipts, reports, and contracts). The model uses bounding-box information to encode spatial layout and then captures the cross-alignment between text and spatial modalities. While the technical innovation itself may not be path-breaking, it does offer great potential in the applied AI space.
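One way to capture text-layout cross-alignment is to give each token a spatial vector derived from its bounding box. Every attention score is then split into text and spatial terms. The sketch below assumes a decomposition along the lines of DocLLM's disentangled attention: text-to-text, text-to-spatial, spatial-to-text, and spatial-to-spatial terms, weighted by scalars. The function names, weights, and box format are illustrative, not the paper's code.

```python
def normalize_box(box: tuple[float, float, float, float], width: float, height: float) -> list[float]:
    """Scale an (x0, y0, x1, y1) bounding box to page-relative coordinates in [0, 1]."""
    x0, y0, x1, y1 = box
    return [x0 / width, y0 / height, x1 / width, y1 / height]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def disentangled_score(q_text, k_text, q_box, k_box, lam_ts=1.0, lam_st=1.0, lam_ss=1.0) -> float:
    """Attention logit between a query token and a key token.

    q_text/k_text: projections of the text hidden states
    q_box/k_box:   projections of the spatial (bounding-box) hidden states, same width
    lam_*:         scalar weights on the cross-modal and spatial-only terms
    """
    return (
        dot(q_text, k_text)            # text attends to text
        + lam_ts * dot(q_text, k_box)  # text query, spatial key
        + lam_st * dot(q_box, k_text)  # spatial query, text key
        + lam_ss * dot(q_box, k_box)   # layout attends to layout
    )


print(normalize_box((50, 100, 150, 120), width=200, height=400))  # [0.25, 0.25, 0.75, 0.3]
print(disentangled_score([1.0, 2.0], [3.0, 4.0], [0.5, 0.5], [1.0, 1.0]))  # 18.5
```

Setting all three weights to zero reduces the score to ordinary text-only attention. In this sketch, layout therefore acts as an additive refinement on top of a standard LLM.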

Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models

This paper proposes a new fine-tuning method called SPIN (Self-Play fIne-tuNing), in which an LLM refines its capability by playing against instances of itself. The LLM generates its own training data from its previous iterations and refines its policy by learning to distinguish these self-generated responses from those in human-annotated data. The researchers also claim that SPIN outperforms models trained through Direct Preference Optimization (DPO) supplemented with extra GPT-4 preference data.
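For each prompt, the training signal compares two quantities, each measured relative to the previous-iteration model. The first is how much the current model raises the likelihood of the human-written response. The second is how much it raises the likelihood of its own previous-iteration response. The sketch below writes this as a logistic loss on per-sequence log-probabilities, following our understanding of the paper's objective. The function name, argument names, and the value of `lam` are assumptions.

```python
import math


def spin_loss(logp_human_new: float, logp_human_old: float,
              logp_self_new: float, logp_self_old: float, lam: float = 0.1) -> float:
    """Logistic self-play loss for one (prompt, human response, self-generated response) triple.

    *_new: sequence log-probability under the model being trained
    *_old: sequence log-probability under the previous-iteration model,
           which also produced the self-generated response
    """
    margin = lam * (logp_human_new - logp_human_old) - lam * (logp_self_new - logp_self_old)
    # log(1 + exp(-margin)), rearranged so exp() never overflows
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(spin_loss(-5.0, -5.0, -7.0, -7.0))  # ~0.693 (= log 2): no preference yet
print(spin_loss(-4.0, -5.0, -8.0, -7.0))  # smaller: human response up, own response down
```

The loss is minimized when the model can separate human-written responses from its own earlier outputs. Once the two become indistinguishable, the margin stays near zero and the self-play loop stops producing a useful signal.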

Other Notable Papers:

- A Survey of Resource-efficient LLM and Multimodal Foundation Models: https://arxiv.org/pdf/2401.08092v1

- Diffuse to Choose: Enriching Image Conditioned Inpainting in Latent Diffusion Models for Virtual Try-All: https://arxiv.org/abs/2401.13795

- How Johnny Can Persuade LLMs to Jailbreak Them: Rethinking Persuasion to Challenge AI Safety by Humanizing LLMs: https://arxiv.org/abs/2401.06373

- If LLM Is the Wizard, Then Code Is the Wand: A Survey on How Code Empowers Large Language Models to Serve as Intelligent Agents: https://arxiv.org/abs/2401.00812

- Instruct-Imagen: Image Generation with Multi-modal Instruction: https://arxiv.org/abs/2401.01952